Announcing TransformersPHP: Bring Machine Learning Magic to Your PHP Projects

Introduction

I'm thrilled to announce the release of TransformersPHP, a new library designed to seamlessly integrate machine learning functionality into PHP projects. As PHP continues to evolve, developers are increasingly using it for advanced capabilities beyond its traditional role. Recognizing this, I've developed TransformersPHP to bridge the gap between PHP and the powerful AI functionality traditionally reserved for Python environments.

What is TransformersPHP?

TransformersPHP is a library that aims to make the advanced capabilities of Hugging Face's Python-based Transformers library accessible to PHP developers. It provides a toolkit for using machine learning models for tasks such as text generation, classification, summarization, translation, and more, all within a PHP environment.

At its core, TransformersPHP leverages ONNX Runtime, a high-performance engine for running ONNX (Open Neural Network Exchange) models. This lets PHP developers use a wide range of pre-trained models covering 100+ languages that were previously only available in Python, opening up a world of possibilities for PHP-based applications.

Key Features

- Familiar API: Uses an API similar to those of the Python Transformers and Xenova Transformers.js libraries, making it easy to follow guides or tutorials written for those libraries.

- NLP Task Support: Currently supports a broad set of NLP tasks, including text classification, fill-mask, zero-shot classification, question answering, token classification, feature extraction (embeddings), translation, summarization, and text generation.

- Pre-trained Model Support: Provides access to a vast collection of pre-trained models from the Hugging Face Hub.

- Easy Model Download and Management: Includes command-line tools for downloading and managing pre-trained models.

- Custom Model Support: Supports using custom models converted from PyTorch, TensorFlow, or JAX into ONNX format.

Getting Started with TransformersPHP

Prerequisites

Before using TransformersPHP, ensure your system meets the following requirements:

- PHP 8.1 or above

- Composer (obviously)

- PHP FFI extension

- JIT compilation (optional, but recommended for performance improvement)

- Increased memory limit (for advanced tasks like text generation)
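Since the FFI extension, JIT, and memory limit are all runtime settings, it can be handy to confirm them before installing. The script below is a minimal sketch that uses only built-in PHP functions; `iniSizeToBytes` and `checkTransformersRequirements` are hypothetical helper names, not part of TransformersPHP, and the script only reports the current values rather than enforcing any particular memory limit.

```php
<?php
declare(strict_types=1);

// Hypothetical helper: convert a php.ini shorthand size such as "128M" or "1G"
// into bytes. "-1" (no limit) is returned unchanged.
function iniSizeToBytes(string $value): int
{
    $value = trim($value);
    if ($value === '-1') {
        return -1;
    }
    $multiplier = match (strtolower(substr($value, -1))) {
        'g' => 1024 ** 3,
        'm' => 1024 ** 2,
        'k' => 1024,
        default => 1,
    };
    return (int) $value * $multiplier;
}

// Hypothetical helper: collect the status of each prerequisite.
function checkTransformersRequirements(): array
{
    // JIT is optional. It only reports as enabled when OPcache is active for
    // this SAPI (e.g. opcache.enable_cli=1 on the command line) and
    // opcache_get_status() is not restricted by opcache.restrict_api.
    $jitEnabled = false;
    if (function_exists('opcache_get_status')) {
        $status = opcache_get_status(false);
        $jitEnabled = is_array($status) && !empty($status['jit']['enabled']);
    }

    $memoryLimit = (string) ini_get('memory_limit');

    return [
        'php_8_1_or_above' => version_compare(PHP_VERSION, '8.1.0', '>='),
        'ffi_loaded'       => extension_loaded('FFI'),
        // ffi.enable defaults to "preload", which allows FFI only on the CLI
        // and in preloaded scripts; web SAPIs need ffi.enable=true.
        'ffi_enable'       => ini_get('ffi.enable'),
        'jit_enabled'      => $jitEnabled,
        'memory_limit'     => $memoryLimit,
        'memory_bytes'     => iniSizeToBytes($memoryLimit),
    ];
}

// Print one line per check.
foreach (checkTransformersRequirements() as $name => $value) {
    printf("%-18s %s\n", $name, var_export($value, true));
}
```

Run it with the same SAPI your application uses (for example, `php check-requirements.php` for CLI scripts), because the CLI and PHP-FPM often load different php.ini files. If the memory limit turns out to be too low for a task like text generation, it can be raised in php.ini or at runtime with `ini_set('memory_limit', ...)`.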

Installation

Installation is straightforward with Composer:

```bash
composer require codewithkyrian/transformers
```

After installation, initialize the package to download the necessary shared libraries for ONNX models:

```bash
./vendor/bin/transformers install
```

Remember, the shared libraries are platform-specific, so make sure to run the install command on the target platform where your code will be executed (e.g., inside the Docker container).

Pre-Download Models

To avoid downloading models on the fly at runtime, you can pre-download the ONNX model weights from the Hugging Face Hub using the command-line tool included with the package:

```bash
./vendor/bin/transformers download <model_name_or_path> [<task>] [options]
```

For example:

```bash
./vendor/bin/transformers download Xenova/mobilebert-uncased-mnli zero-shot-classification
```

Example Usage

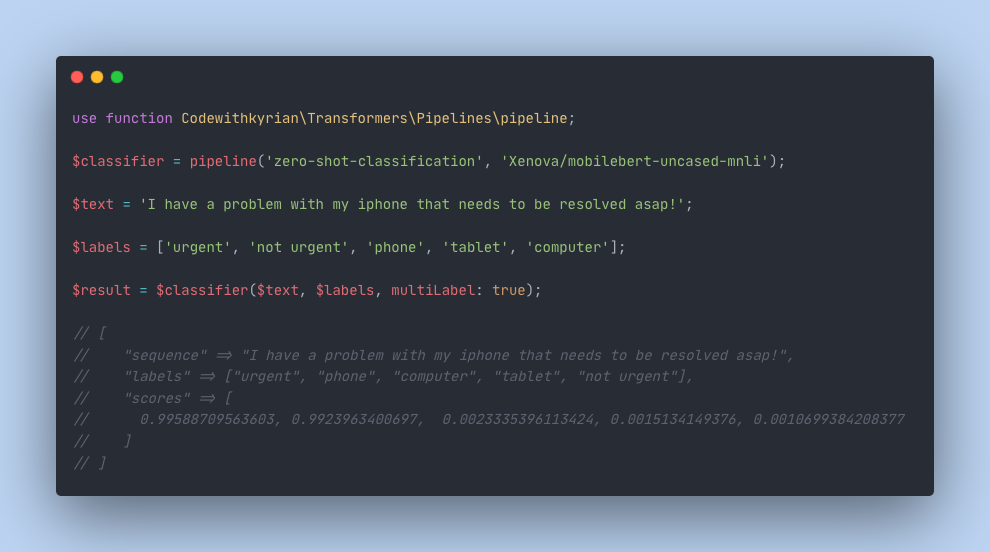

Here's a simple example of how to use TransformersPHP for zero-shot classification:

```php
<?php

use function Codewithkyrian\Transformers\Pipelines\pipeline;

require_once 'vendor/autoload.php';

$classifier = pipeline('zero-shot-classification', 'Xenova/mobilebert-uncased-mnli');

$text = 'I have a problem with my iphone that needs to be resolved asap!';
$labels = ['urgent', 'not urgent', 'phone', 'tablet', 'computer'];

// multiLabel: true scores each label independently, so scores need not sum to 1
$result = $classifier($text, $labels, multiLabel: true);
```

The output will be:

```php
[
    "sequence" => "I have a problem with my iphone that needs to be resolved asap!",
    "labels" => ["urgent", "phone", "computer", "tablet", "not urgent"],
    "scores" => [0.99588709563603, 0.9923963400697, 0.0023335396113424, 0.0015134149376, 0.0010699384208377]
]
```

Example 2

Here's another example, this time for token classification (here, part-of-speech tagging):

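```php
<?php

use function Codewithkyrian\Transformers\Pipelines\pipeline;

require_once 'vendor/autoload.php';

$ner = pipeline('token-classification', 'codewithkyrian/bert-english-uncased-finetuned-pos');

// Group sub-word tokens back into whole words ('max' keeps the highest-scoring tag)
$output = $ner('My name is Kyrian and I live in Onitsha', aggregationStrategy: 'max');
```

The output will be: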

```php
[
    ["entity_group" => "PRON", "word" => "my", "score" => 0.99482086393966],
    ["entity_group" => "NOUN", "word" => "name", "score" => 0.95769686675798],
    ["entity_group" => "AUX", "word" => "is", "score" => 0.97602109098715],
    ["entity_group" => "PROPN", "word" => "kyrian", "score" => 0.96583783664597],
    ["entity_group" => "CCONJ", "word" => "and", "score" => 0.98444884455349],
    ["entity_group" => "PRON", "word" => "i", "score" => 0.99566682068677],
    ["entity_group" => "VERB", "word" => "live", "score" => 0.98391136480035],
    ["entity_group" => "ADP", "word" => "in", "score" => 0.99580186695928],
    ["entity_group" => "PROPN", "word" => "onitsha", "score" => 0.91250281394515],
]
```

Learn More

For detailed information on installation, model conversion, and usage of TransformersPHP, head over to the comprehensive documentation. You can also check out the package GitHub repository and leave some stars ⭐️

I'm excited to see how the PHP community uses TransformersPHP to push the boundaries of what's possible in web development and beyond.
